Prompt chaining is a technique for getting better performance from an LLM by breaking a task into subtasks, so that each one is simpler to complete. It's also a great way to take a repetitive conversation and turn it into an AI app that is consistent across sessions and can be shared with your team without copy-pasting prompts. With Anthropic's launch of Claude 2, we decided to take a look at how we can leverage prompt chaining with the new model to maximise its performance.

There are different scenarios where prompt chaining can come in handy. We'll start with the ones Anthropic recommends in their documentation and show how to use them without calling the API, writing code, or manually copy-pasting each section into a conversation.

1. Improving document answering

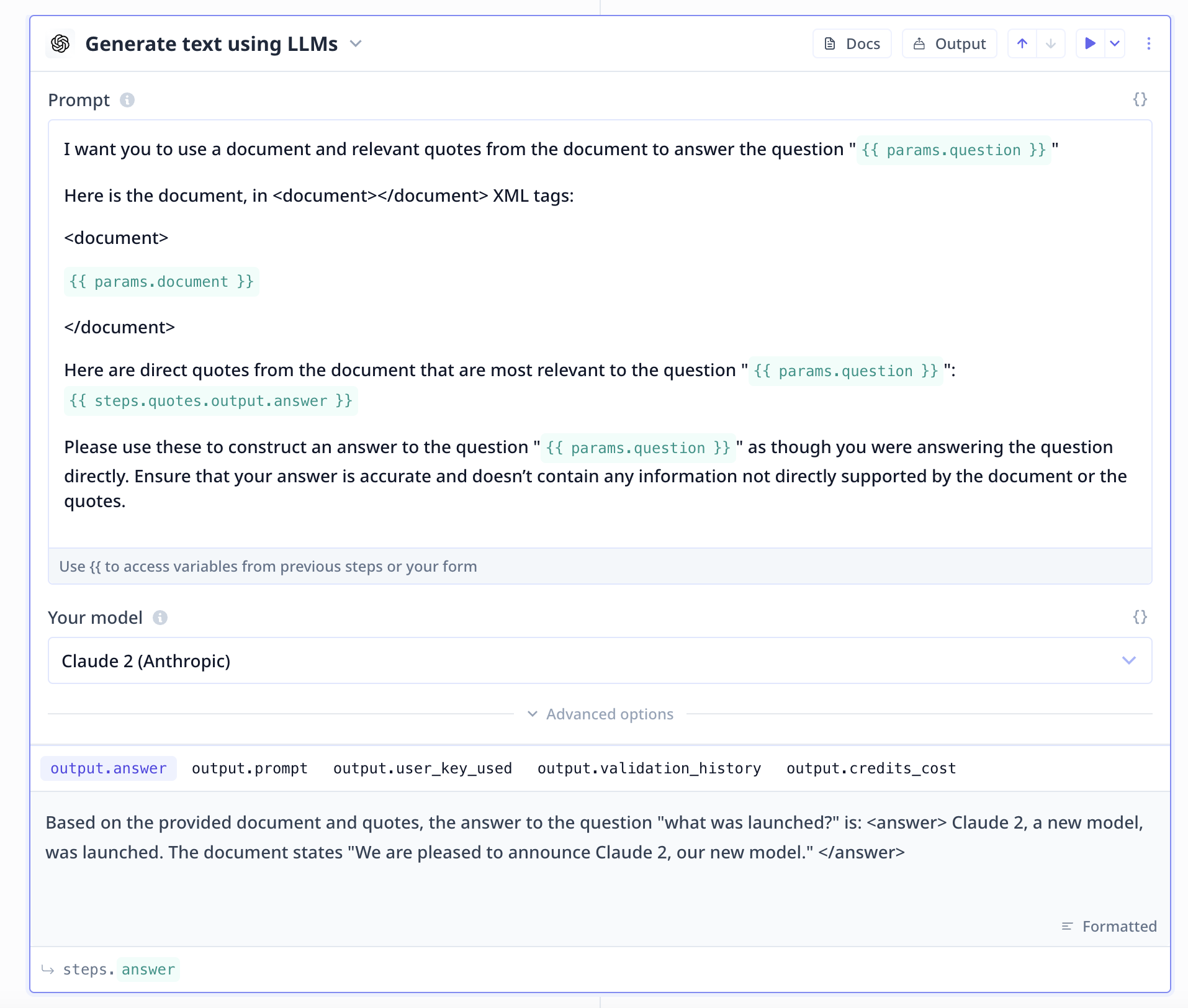

Oftentimes when using LLMs, we want them to extract answers from a long piece of context. This is a good candidate for chaining, as we can improve the results by splitting the task into two steps:

- Extract the quotes from the document that are relevant to the question

- Answer the question by using the document and the relevant quotes as context

This can improve performance because the first step curates the data, so the LLM has better context by the time it is asked the question. You can clone the template directly from here and try it yourself. Compare how it performs against a single prompt: the longer the input document, the more error-prone a single prompt becomes.
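If you do want to see what the two steps look like in code, here is a minimal Python sketch. It is an illustration only, not Anthropic's or Relevance AI's implementation: `complete` is a hypothetical stand-in for whatever function sends a prompt to your model and returns its reply, and the function names and prompt wording are our own assumptions.

```python
from typing import Callable

# Hypothetical stand-in: any function that sends a prompt to your model
# (Claude 2 or otherwise) and returns the text of its reply.
Complete = Callable[[str], str]


def extract_quotes(complete: Complete, document: str, question: str) -> str:
    """Step 1: ask only for the quotes relevant to the question."""
    prompt = (
        f"<document>\n{document}\n</document>\n\n"
        "Extract, word for word, the quotes from the document that are "
        f"relevant to this question: {question}\n"
        "If there are none, reply with 'No relevant quotes'."
    )
    return complete(prompt)


def answer_with_quotes(
    complete: Complete, document: str, question: str, quotes: str
) -> str:
    """Step 2: answer using the document plus the quotes from step 1."""
    prompt = (
        f"<document>\n{document}\n</document>\n\n"
        f"<quotes>\n{quotes}\n</quotes>\n\n"
        "Using the document and the quotes above as context, answer this "
        f"question: {question}"
    )
    return complete(prompt)


def answer_question(complete: Complete, document: str, question: str) -> str:
    """Run the chain: the output of step 1 becomes input to step 2."""
    quotes = extract_quotes(complete, document, question)
    return answer_with_quotes(complete, document, question, quotes)
```

To use it, you would pass in a small wrapper around your own model client as `complete`; the chain itself does not depend on any particular SDK.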

2. Self-evaluating output

Prompt chaining can be quite useful for evaluating whether the output of a previous prompt was accurate. This can be used to either pick up mistakes in the answer or identify if sections were missed.

In an example provided by Anthropic here, a follow-up prompt is used to identify whether any errors were missed when evaluating the grammatical correctness of an article. You can view the prompts here and, if you'd like to try it without code, you can clone the template here.
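For readers who prefer code, the self-evaluation pattern can be sketched roughly as follows. This is a simplified illustration rather than Anthropic's actual prompts: `complete` is again a hypothetical function that sends a prompt to your model and returns its reply, and the prompt wording and function names are assumptions.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a call to your model; returns the reply text.
Complete = Callable[[str], str]


def find_errors(complete: Complete, article: str) -> str:
    """First prompt: list the grammatical errors in the article."""
    prompt = (
        f"<article>\n{article}\n</article>\n\n"
        "List the grammatical errors in the article above, one per line."
    )
    return complete(prompt)


def find_missed_errors(complete: Complete, article: str, errors: str) -> str:
    """Follow-up prompt: check the first list against the article."""
    prompt = (
        f"<article>\n{article}\n</article>\n\n"
        f"<errors>\n{errors}\n</errors>\n\n"
        "The list above contains grammatical errors found in the article. "
        "List any grammatical errors in the article that are missing from "
        "the list. If none are missing, reply with 'No additional errors'."
    )
    return complete(prompt)


def review_article(complete: Complete, article: str) -> Dict[str, str]:
    """Run both prompts and return both outputs for inspection."""
    errors = find_errors(complete, article)
    missed = find_missed_errors(complete, article, errors)
    return {"errors": errors, "missed": missed}
```

Returning both outputs, rather than only the second, makes it easier to see what the follow-up prompt actually added.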

3. Turning ad-hoc assistant conversations into apps

The other major benefit of prompt chaining is that you can take sequences of prompts you would usually run ad-hoc with assistants like Claude and turn them into shareable LLM apps. Because the prompts are fixed, these apps produce consistent output each time you run them, and they save you the time of copy-pasting prompts back and forth as you build out the use-case.
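To make the idea concrete, here is one way a fixed sequence of prompts could be captured as a reusable chain in Python. It is a sketch under our own assumptions, not how any particular product implements it: `run_chain`, the `IMPROVE_CONTENT` steps and the `complete` function (a stand-in for a call to your model) are all hypothetical names.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical stand-in for a call to your model; returns the reply text.
Complete = Callable[[str], str]


def run_chain(
    complete: Complete,
    steps: Sequence[Tuple[str, str]],
    inputs: Dict[str, str],
) -> Dict[str, str]:
    """Run (name, template) steps in order.

    Each template is filled with str.format from the inputs and the outputs
    of earlier steps, and its reply is stored under the step's name.
    Literal braces in a template must be doubled ({{ and }}).
    """
    values = dict(inputs)
    for name, template in steps:
        values[name] = complete(template.format(**values))
    return values


# An example chain for improving content: critique first, then rewrite.
IMPROVE_CONTENT = [
    ("critique", "List the three weakest points of this draft:\n\n{draft}"),
    (
        "rewrite",
        "Rewrite the draft to address the critique.\n\n"
        "Draft:\n{draft}\n\nCritique:\n{critique}",
    ),
]
```

Calling `run_chain(complete, IMPROVE_CONTENT, {"draft": text})` then sends the same two prompts in the same order every time, which is what lets a teammate reuse the workflow without the original conversation.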

For example, if you have a workflow of prompts for improving content or generating sales messaging, you can turn it into an LLM app with Relevance AI using the exact same technique. Relevance AI is a low-code builder for AI apps and automations that gives you production-ready, reliable apps based on your prompts. You can even connect it to third-party services like Google search and to your own data for additional context.

If you'd like to learn more about Relevance AI, you can visit here or read our blog post about how we automate our own sales responses using an app that takes our past conversations as context and takes LinkedIn data to make it personalised.